This tutorial explains how to use the QIIME (Quantitative Insights Into Microbial Ecology) Pipeline to process data from high-throughput 16S rRNA sequencing studies. The purpose of this pipeline is to provide a start-to-finish workflow, beginning with multiplexed sequence reads and finishing with taxonomic and phylogenetic profiles and comparisons of the samples in the study. With this information in hand, it is possible to determine biological and environmental factors that alter microbial community ecology in your experiment.

As an example, we will use data from a study of the response of mouse gut microbial communities to fasting (Crawford et al., 2009). To make this tutorial run quickly on a personal computer, we will use a subset of the data generated from 5 animals kept on the control ad libitum fed diet, and 4 animals fasted for 24 hours before sacrifice. At the end of our tutorial, we will be able to compare the community structure of control vs. fasted animals. In particular, we will be able to compare taxonomic profiles for each sample type, differences in diversity metrics within the samples and between the groups, and perform comparative clustering analysis to look for overall differences in the samples.

To process our data, we will perform the following steps, each of which is described in more detail in the Data Analysis Steps:

All the files you will need for this tutorial are here (http://bmf.colorado.edu/QIIME/qiime_tutorial-v1.2.1.zip). Descriptions of these files are below.

This is the 454-machine generated FASTA file. Using the Amplicon processing software on the 454 FLX standard, each region of the PTP plate will yield a fasta file of form 1.TCA.454Reads.fna, where “1” is replaced with the appropriate region number. For the purposes of this tutorial, we will use the fasta file Fasting_Example.fna.

This is the 454-machine generated quality score file, which contains a score for each base in each sequence included in the FASTA file. Like the fasta file mentioned above, the Amplicon processing software will generate one of these files for each region of the PTP plate, named 1.TCA.454Reads.qual, etc. For the purposes of this tutorial, we will use the quality scores file Fasting_Example.qual.

The mapping file is generated by the user. This file contains all of the information about the samples necessary to perform the data analysis. At a minimum, the mapping file should contain the name of each sample, the barcode sequence used for each sample, the linker/primer sequence used to amplify the sample, and a Description column. In general, you should also include in the mapping file any metadata that relates to the samples (for instance, health status or sampling site) and any additional information relating to specific samples that may be useful to have at hand when considering outliers (for example, what medications a patient was taking at time of sampling). Full format specifications can be found in the Documentation.
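As a rough illustration of the minimum requirements described above, the following Python sketch checks a tab-delimited mapping file for the four required fields. The header names (#SampleID, BarcodeSequence, LinkerPrimerSequence, Description) are assumptions about how those fields are labeled, and the two-sample example is hypothetical. This is not a substitute for check_id_map.py, which performs many more checks.

```python
import csv
import io

# Assumed header names for the four required fields; the tutorial text only
# says that sample name, barcode, linker/primer and Description are required.
REQUIRED = ["#SampleID", "BarcodeSequence", "LinkerPrimerSequence", "Description"]


def missing_required_columns(mapping_text):
    """Return the required column names absent from a tab-delimited mapping file."""
    reader = csv.reader(io.StringIO(mapping_text), delimiter="\t")
    header = next(reader)
    return [name for name in REQUIRED if name not in header]


# Hypothetical two-sample mapping file, not the tutorial's Fasting_Map.txt.
example = (
    "#SampleID\tBarcodeSequence\tLinkerPrimerSequence\tTreatment\tDescription\n"
    "S1\tAGCA\tCATGCTGC\tControl\tcontrol mouse\n"
    "S2\tACCA\tCATGCTGC\tFast\tfasted mouse\n"
)
print(missing_required_columns(example))  # prints []
```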

You are highly encouraged to validate your mapping file using check_id_map.py before attempting to analyze your data. This tool will check for errors, and make suggestions for other aspects of the file to be edited (errors and warnings are output to a log file, and suggested changes to invalid characters are output to a corrected_mapping.txt file). For the purposes of this tutorial, we will use the mapping file Fasting_Map.txt. The contents of the mapping file are shown here - as you can see, a nucleotide barcode sequence is provided for each of the 9 samples, as well as metadata related to treatment group and date of birth, and general run descriptions about the project. Fasting_Map.txt file contents:

This is the 454-machine generated file which stores the sequencing trace data. This is the largest file returned from a 454 run. The sffinfo command in the 454 software package can be used to generate sequence and quality files from sff file(s) as follows.

To generate a fasta file:

sffinfo -s NAME_OF_SFF_FILES > OUTPUT_NAME.fna

To generate a quality score file:

sffinfo -q NAME_OF_SFF_FILES > OUTPUT_NAME.qual

In this walkthrough, white text on a black background denotes the command-line invocation of scripts. You can find full usage information for each script by passing the -h option (help) and/or by reading the full description in the Documentation. First, assemble the sequences (.fna), quality scores (.qual), and metadata mapping file into a directory. Execute all tutorial commands from within the qiime_tutorial directory, which can be downloaded from here.

Filter the reads based on quality, and assign multiplexed reads to starting sample by nucleotide barcode.

Before beginning the pipeline, you should ensure that your mapping file is formatted correctly with the check_id_map.py script.

check_id_map.py -m Fasting_Map.txt -o mapping_output/

If verbose (-v) is enabled, this utility will print to STDOUT a message indicating whether or not problems were found in the mapping file. Errors and warnings will be output to a log file, which will be present in the specified (-o) output directory. Errors will cause fatal problems with subsequent scripts and must be corrected before moving forward. Warnings will not cause fatal problems, but you are encouraged to fix them, as they often indicate typos in your mapping file, invalid characters, or other unintended errors that will affect downstream analysis. A corrected_mapping.txt file will also be created in the output directory, containing either a copy of the mapping file with invalid characters replaced by underscores, or a message indicating that no invalid characters were found.

The next task is to assign the multiplexed reads to samples based on their nucleotide barcode. This step also performs quality filtering based on the characteristics of each sequence, removing any low-quality or ambiguous reads. The script for this step is split_libraries.py. A full description of the parameters for this script is given in the Documentation. For this tutorial, we will use the default parameters (minimum quality score = 25, minimum/maximum length = 200/1000, no ambiguous bases allowed, and no mismatches allowed in the primer sequence):

split_libraries.py -m Fasting_Map.txt -f Fasting_Example.fna -q Fasting_Example.qual -o split_library_output

This invocation will create three files in the new directory split_library_output/:

A few lines from the seqs.fna file are shown below:
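The barcode assignment and quality filtering performed in this step can be sketched roughly in Python as follows. This is a simplified illustration under stated assumptions, not the logic of split_libraries.py itself: it uses the mean quality of the whole read, checks length on the full read (barcode included), ignores primers, and requires an exact barcode match at the start of the read. The function name, barcodes, and sample IDs are hypothetical.

```python
def assign_read(seq, quals, barcodes, min_qual=25, min_len=200, max_len=1000):
    """Return the sample ID for a read, or None if the read is rejected.

    barcodes maps barcode sequence -> sample ID. The thresholds default to
    the values quoted in the tutorial text.
    """
    if not (min_len <= len(seq) <= max_len):
        return None  # too short or too long
    if "N" in seq.upper():
        return None  # ambiguous base present
    if sum(quals) / len(quals) < min_qual:
        return None  # mean quality below threshold
    for barcode, sample_id in barcodes.items():
        if seq.startswith(barcode):
            return sample_id
    return None  # no barcode matched


barcodes = {"AGCA": "S1", "ACCA": "S2"}  # hypothetical barcodes
read = "AGCA" + "ACGT" * 3               # a 16-base toy read
# min_len is lowered only so that the toy read passes the length filter.
print(assign_read(read, [30] * len(read), barcodes, min_len=10))  # prints S1
```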

QIIME includes workflow scripts, which allow multiple tasks to be performed with one command. Within the QIIME directory there is a file qiime_parameters.txt, where the user can set parameters for specific steps within a workflow script. Do not edit the original file; instead, copy qiime_parameters.txt into your working directory and give the copy a new filename (e.g. custom_parameters.txt). If you are using the tutorial dataset, the parameters file custom_parameters.txt is included, with many parameters already set to values appropriate for the tutorial data. For more information on the qiime_parameters.txt file, please refer to the QIIME documentation. In this tutorial, we will use the workflow scripts where appropriate, and in each section where a workflow is used we will discuss which options in custom_parameters.txt correspond to each step of the workflow. Users can run the workflow scripts in parallel by passing the “-a” option to each script; on a laptop, however, this requires a machine with more than one core (e.g. a dual- or quad-core processor).
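To make the structure of a parameters file concrete, here is a minimal Python sketch of a reader for lines of the form "script:parameter value", which is the layout this tutorial uses (for example, pick_otus:similarity 0.97). It assumes that commented-out options start with "#" and that a blank value means "use the default"; the last entry in the example is a hypothetical name used only to show a blank value. QIIME's own parser may behave differently.

```python
def parse_parameters(lines):
    """Parse 'script:parameter value' lines into a nested dict."""
    params = {}
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and commented-out options
        fields = line.split(None, 1)  # split on the first run of tabs/spaces
        value = fields[1].strip() if len(fields) > 1 else None  # None: default
        script, _, name = fields[0].partition(":")
        params.setdefault(script, {})[name] = value
    return params


example = [
    "pick_otus:similarity\t0.97",
    "#assign_taxonomy:e_value",
    "hypothetical_script:some_option",  # hypothetical entry with a blank value
]
print(parse_parameters(example))
```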

Here we will be running the pick_otus_through_otu_table.py workflow, which consists of the following steps:

We will first go through each step and define the parameters in custom_parameters.txt and then at the end, we will run this workflow script.

Optionally, we can denoise the sequences by clustering their flowgrams. For a single library/sff file, we can simply use the workflow script pick_otus_through_otu_table.py, providing it with the sff file and the metadata mapping file. For multiple sff files, refer to the special purpose tutorial Denoising of 454 Data Sets.

At this step, all of the sequences from all of the samples will be clustered into Operational Taxonomic Units (OTUs) based on their sequence similarity. OTUs in QIIME are clusters of sequences, frequently intended to represent some degree of taxonomic relatedness. For example, when sequences are clustered at 97% sequence similarity with uclust, each resulting cluster is typically thought of as representing a species. This model and the current techniques for picking OTUs are known to be flawed, and determining exactly how OTUs should be defined, and what they represent, is an active area of research. Even so, OTU picking identifies highly similar sequences across the samples and provides a platform for comparisons of community structure. The script pick_otus.py takes as input the fasta file output from Assign Samples to Multiplex Reads above, and returns a list of the OTUs detected along with the fasta headers of the sequences that belong to each OTU. To make the workflow invoke pick_otus.py using uclust to cluster and the default setting of 97% similarity determining an OTU, include the following settings in the custom_parameters.txt file:

Note that tabs or spaces separate the fields, e.g.: pick_otus:similarity 0.97. Many of these parameters are left blank, in which case default values are used; the user can supply values when necessary. Once this step in the workflow is run, the newly created directory wf_da/uclust_picked_otus/ will contain two files. One is seqs.log, which contains information about the invocation of the script. The other is the tab-delimited file seqs_otus.txt, which records the OTUs. Each OTU is arbitrarily named by a number, which is recorded in the first column. The subsequent columns in each line identify the sequence or sequences that belong to that OTU.
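The layout of seqs_otus.txt described above (OTU number in the first column, member sequence identifiers in the remaining columns, tab-delimited) can be read with a few lines of Python. This is a sketch; the sequence identifiers in the example are hypothetical.

```python
def parse_otu_map(lines):
    """Map each OTU ID (first column) to the list of its sequence IDs."""
    otus = {}
    for line in lines:
        fields = line.rstrip("\n").split("\t")
        if fields and fields[0]:
            otus[fields[0]] = fields[1:]
    return otus


# Hypothetical contents: OTU 0 has two member sequences, OTU 1 has one.
example = ["0\tS1_1\tS2_7\n", "1\tS1_4\n"]
print(parse_otu_map(example))  # prints {'0': ['S1_1', 'S2_7'], '1': ['S1_4']}
```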

Since each OTU may be made up of many sequences, we will pick a representative sequence for that OTU for downstream analysis. This representative sequence will be used for taxonomic identification of the OTU and phylogenetic alignment. The script pick_rep_set.py uses the OTU file created above and extracts a representative sequence from the fasta file by one of several methods. To use the default method, where the most abundant sequence in the OTU is used as the representative sequence, set the parameters in custom_parameters.txt as follows:

In the wf_da/uclust_picked_otus/rep_set/ directory, the script has created two new files - the log file seqs_rep_set.log and the fasta file seqs_rep_set.fasta containing one representative sequence for each OTU. In this fasta file, the sequence has been renamed by the OTU, and the additional information on the header line reflects the sequence used as the representative:
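The idea behind the default method, choosing the most abundant sequence in an OTU as its representative, can be sketched in Python as follows. This is an illustration only and is not taken from pick_rep_set.py; the function name and the example identifiers and sequences are hypothetical, and ties are broken by whichever sequence is encountered first.

```python
from collections import Counter


def pick_representative(seq_ids, sequences):
    """Return (seq_id, sequence) for the most common sequence string in an OTU.

    seq_ids lists the OTU's member IDs; sequences maps ID -> sequence string.
    """
    counts = Counter(sequences[s] for s in seq_ids)
    best_seq, _ = counts.most_common(1)[0]
    for s in seq_ids:
        if sequences[s] == best_seq:
            return s, best_seq  # first member carrying the most common sequence


sequences = {"S1_1": "ACGT", "S1_2": "ACGA", "S2_1": "ACGT"}  # hypothetical
print(pick_representative(["S1_1", "S1_2", "S2_1"], sequences))  # prints ('S1_1', 'ACGT')
```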

Alignment of the sequences and phylogeny inference are necessary only if phylogenetic tools such as UniFrac will be subsequently invoked. Alignments can be generated either de novo using programs such as MUSCLE, or through assignment to an existing alignment with tools like PyNAST. For small studies such as this tutorial, either method is possible. However, for studies involving many sequences (roughly, more than 1000), the de novo aligners are very slow and assignment with PyNAST is preferred. Either alignment approach is accomplished with the script align_seqs.py. Since this is one of the most computationally intensive bottlenecks in the pipeline, large studies would benefit greatly from parallelization of this task (described in detail in the Documentation). When using PyNAST as an aligner, the user must supply a template alignment. If the user followed the instructions (4.Getting_started_with_QIIME.txt) in the Virtual Machine, then the greengenes files will be located in /home/qiime/. For the tutorial, we will use PyNAST as the alignment method, UCLUST as the pairwise alignment method, a minimum length of 150, and a minimum percent identity of 75.0 in custom_parameters.txt as follows:

A log file and an alignment file are created in the directory wf_da/uclust_picked_otus/rep_set/pynast_aligned_seqs/.

A primary goal of the QIIME pipeline is to assign high-throughput sequencing reads to taxonomic identities using established databases. This will give you information on the microbial lineages found in your samples. Using assign_taxonomy.py, you can compare your OTUs against a reference database of your choosing. For our example, we will set the assignment_method to the RDP classification system and a confidence of 0.8 in custom_parameters.txt. Note: the option “assign_taxonomy:e_value” is commented out, since it is not used for the rdp method and it will cause the parallel version of this workflow to fail.

In the directory wf_da/uclust_picked_otus/rep_set/rdp_assigned_taxonomy, there will be a log file and a text file. The text file contains a line for each OTU considered, with the RDP taxonomy assignment and a numerical confidence of that assignment (1 is the highest possible confidence). For some OTUs, the assignment will be as specific as a bacterial species, while others may be assignable to nothing more specific than the bacterial domain. Below are the first few lines of the text file. Note that the taxonomic assignments and confidence values from your run may not match the output shown below, because the RDP classifier estimates confidence by random resampling:

Before building the tree, one must filter the alignment to remove columns composed only of gaps. Note that, depending on where you obtained the lanemask file, it will be named either lanemask_in_1s_and_0s.txt or lanemask_in_1s_and_0s. If the user followed the instructions (4.Getting_started_with_QIIME.txt) in the Virtual Machine, then the greengenes files will be located in /home/qiime/. We will also set the allowed gap fraction to 0.999999, remove outliers to False, and the threshold to 3.0 in custom_parameters.txt as follows:

A filtered alignment file is created in the directory wf_da/uclust_picked_otus/rep_set/pynast_aligned_seqs/.

The filtered alignment file produced in the directory wf_da/uclust_picked_otus/rep_set/pynast_aligned_seqs/ can be used to build a phylogenetic tree using a tree-building program. As an example, we can set the tree_method to fasttree and the root_method to tree_method_default in custom_parameters.txt.

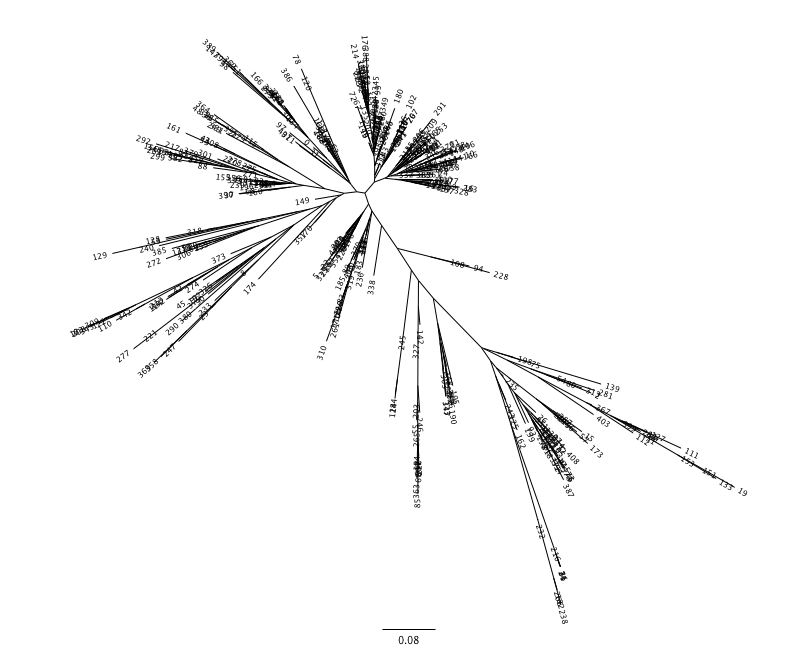

The Newick format tree file is written to seqs_rep_set.tre, which is located in the wf_da/uclust_picked_otus/rep_set/pynast_aligned_seqs/fasttree_phylogeny directory. This file can be viewed in tree visualization software, and is necessary for UniFrac diversity measurements (described below). For the following example, the FigTree program was used to visualize the phylogenetic tree obtained from seqs_rep_set.tre.

Using these assignments and the OTU file created in Step 1. Pick OTUs based on Sequence Similarity within the Reads, we can make a readable matrix of OTU by Sample with meaningful taxonomic identifiers for each OTU. Currently there are no parameters in custom_parameters.txt for the user to define when making an OTU table.

The result of this step is seqs_otu_table.txt, which is located in the wf_da/uclust_picked_otus/rep_set/rdp_assigned_taxonomy/otu_table/ directory. The first few lines of seqs_otu_table.txt are shown below (OTUs 1-9), where the first column contains the OTU number, the last column contains the taxonomic assignment for the OTU, and 9 columns between are for each of our 9 samples. The value of each ij entry in the matrix is the number of times OTU i was found in the sequences for sample j.
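The OTU-by-sample matrix described above can be sketched in Python as a count of member sequences per OTU and sample. The sketch assumes that each sequence identifier has the form SampleID_number, so that the sample is everything before the last underscore; that convention, the function name, and the example data are assumptions made for illustration.

```python
from collections import defaultdict


def build_otu_table(otu_map):
    """Return {otu_id: {sample_id: count}} from {otu_id: [sequence IDs]}."""
    table = defaultdict(lambda: defaultdict(int))
    for otu_id, seq_ids in otu_map.items():
        for seq_id in seq_ids:
            sample = seq_id.rsplit("_", 1)[0]  # assumed ID form: SampleID_number
            table[otu_id][sample] += 1
    return {otu: dict(counts) for otu, counts in table.items()}


otu_map = {"0": ["S1_1", "S2_7", "S1_9"], "1": ["S1_4"]}  # hypothetical OTU map
print(build_otu_table(otu_map))  # prints {'0': {'S1': 2, 'S2': 1}, '1': {'S1': 1}}
```

Entry (i, j) of the result is the number of times OTU i was found among the sequences of sample j; samples with a zero count are simply absent from the inner dictionary.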

Now that we have set the parameters necessary for this workflow script, the user can run the following command, where we define the input sequence file “-i” (from split_libraries.py), the parameter file to use “-p” and the output directory “-o”:

pick_otus_through_otu_table.py -i split_library_output/seqs.fna -p custom_parameters.txt -o wf_da

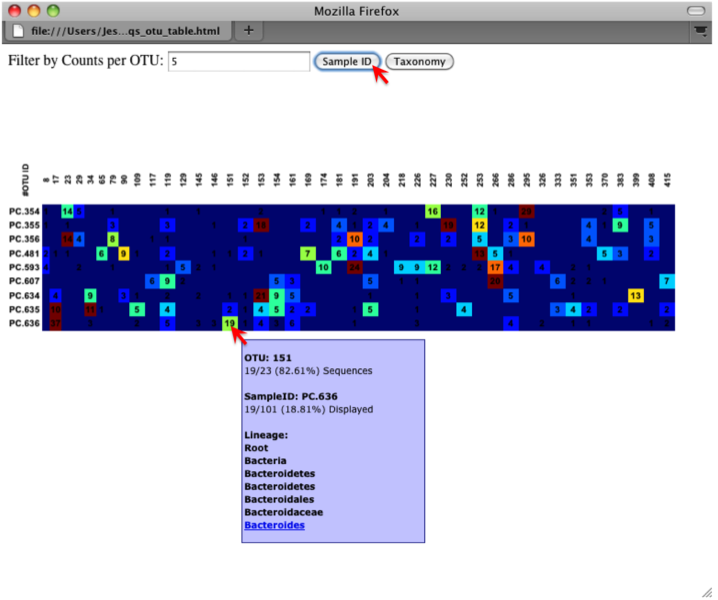

The QIIME pipeline includes a very useful utility to generate images of the OTU table. The script is make_otu_heatmap_html.py:

make_otu_heatmap_html.py -i wf_da/uclust_picked_otus/rep_set/rdp_assigned_taxonomy/otu_table/seqs_otu_table.txt -o wf_da/uclust_picked_otus/rep_set/rdp_assigned_taxonomy/otu_table/OTU_Heatmap/

An html file is created in the directory “wf_da/uclust_picked_otus/rep_set/rdp_assigned_taxonomy/otu_table/OTU_Heatmap/”. You can open this file with any web browser, and you will be prompted to enter a value for “Filter by Counts per OTU”. Only OTUs with total counts at or above this threshold will be displayed. The OTU heatmap displays raw OTU counts per sample, where the counts are colored based on the contribution of each OTU to the total OTU count present in that sample (blue: contributes a low percentage of OTUs to the sample; red: contributes a high percentage of OTUs). Click the “Sample ID” button, and a graphic will be generated like the figure below. For each sample, you will see in a heatmap the number of times each OTU was found in that sample. You can mouse over any individual count to get more information on the OTU (including taxonomic assignment). Within the mouseover, there is a link for the terminal lineage assignment, so you can easily search Google for more information about that assignment.

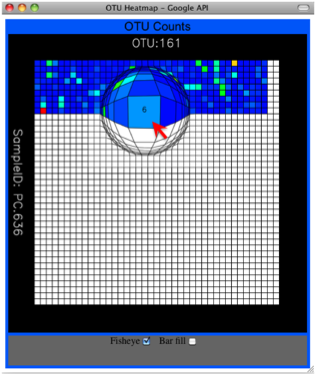

Alternatively, you can click on one of the counts in the heatmap and a new pop-up window will appear. The pop-up window uses a Google Visualization API called Magic-Table. Depending on which table count you clicked on, the pop-up window will put the clicked-on count in the middle of the pop-up heatmap as shown below. For the following example, the table count with the red arrow mouseover is the same one being focused on using the Magic-Table.

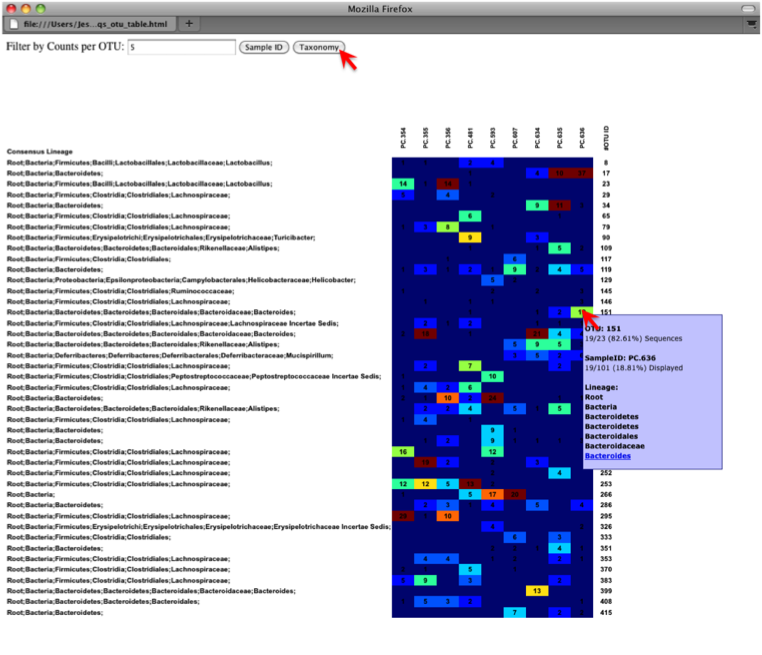

On the original heatmap webpage, if you select the “Taxonomy” button instead, you will generate a heatmap keyed by taxon assignment, which allows you to conveniently look for organisms and lineages of interest in your study. Again, mousing over an individual count will show additional information for that OTU and sample.

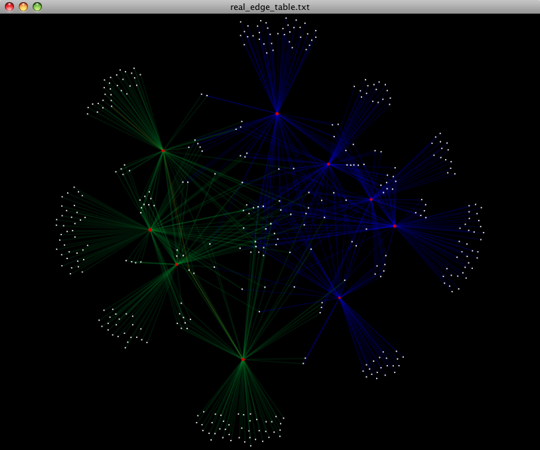

An alternative to viewing the OTU table as a heatmap is to create an OTU network, using the following command:

make_otu_network.py -m Fasting_Map.txt -i wf_da/uclust_picked_otus/rep_set/rdp_assigned_taxonomy/otu_table/seqs_otu_table.txt -o wf_da/uclust_picked_otus/rep_set/rdp_assigned_taxonomy/otu_table/OTU_Network

To visualize the network, we use the Cytoscape program, which you can run by calling cytoscape from the command line (you may need to begin the command with either a capital or lowercase ‘C’, depending on your version of Cytoscape). In the network, each red circle represents a sample and each white square represents an OTU. The lines represent the OTUs present in a particular sample (blue for controls and green for fasting). For more information about opening the files in Cytoscape please refer here.

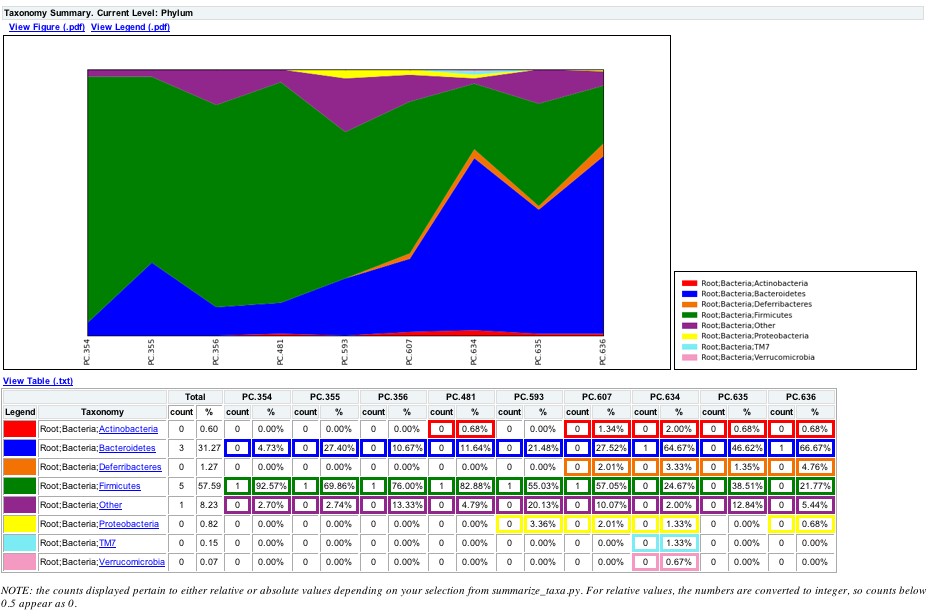

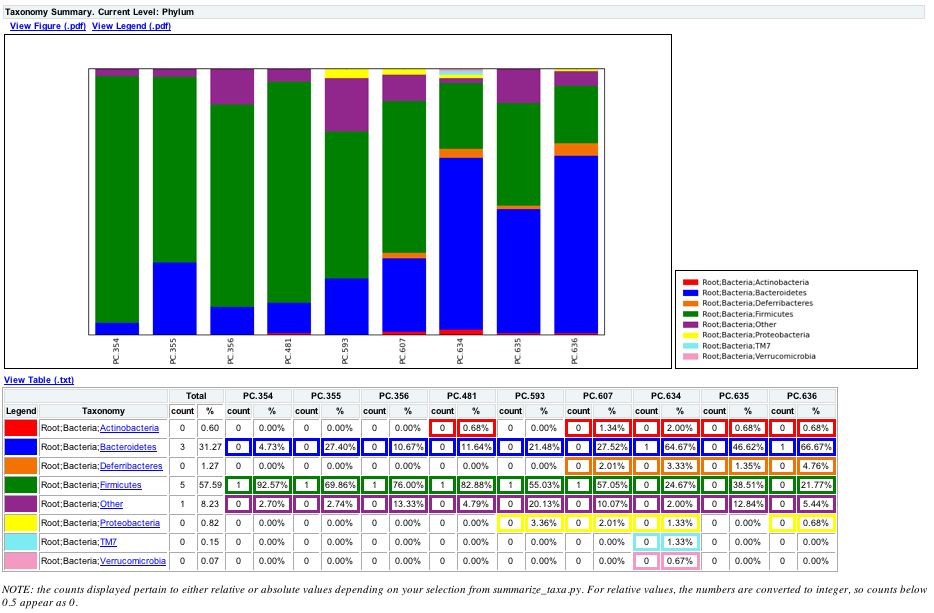

You can group OTUs by different taxonomic levels (division, class, family) with the script summarize_taxa.py. The inputs are the OTU table created above and the taxonomic level at which to group the OTUs. For the RDP taxonomy, the levels correspond to the following ranks: 2 = Domain (e.g. Bacteria), 3 = Phylum (e.g. Actinobacteria), 4 = Class, and so on.

summarize_taxa.py -i wf_da/uclust_picked_otus/rep_set/rdp_assigned_taxonomy/otu_table/seqs_otu_table.txt -o wf_da/uclust_picked_otus/rep_set/rdp_assigned_taxonomy/otu_table/otu_table_Level3.txt -L 3 -r 0

The script will generate a new OTU table wf_da/uclust_picked_otus/rep_set/rdp_assigned_taxonomy/otu_table/otu_table_Level3.txt, where the value of each ij entry in the matrix is the count of the number of times all OTUs belonging to the taxon i (for example, Phylum Actinobacteria) were found in the sequences for sample j.
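The grouping that produces such a table can be sketched in Python: each OTU's lineage is truncated to the requested number of ranks, and the counts of all OTUs sharing the truncated lineage are summed per sample. The sketch assumes semicolon-separated lineage strings that begin with a root entry, so that level 3 ends at the phylum; the function name and the example lineages and counts are hypothetical and are not QIIME output.

```python
def summarize_taxa(otu_counts, lineages, level):
    """Sum OTU counts per sample for lineages truncated to `level` ranks."""
    summary = {}
    for otu_id, sample_counts in otu_counts.items():
        ranks = [r.strip() for r in lineages[otu_id].split(";")]
        taxon = ";".join(ranks[:level])
        bucket = summary.setdefault(taxon, {})
        for sample, count in sample_counts.items():
            bucket[sample] = bucket.get(sample, 0) + count
    return summary


# Hypothetical OTU counts and lineage strings.
otu_counts = {"0": {"S1": 2, "S2": 1}, "1": {"S1": 1}, "2": {"S2": 3}}
lineages = {
    "0": "Root;Bacteria;Firmicutes;Clostridia",
    "1": "Root;Bacteria;Firmicutes;Bacilli",
    "2": "Root;Bacteria;Bacteroidetes",
}
print(summarize_taxa(otu_counts, lineages, 3))
```

With these inputs, the two Firmicutes OTUs are merged into one row with counts S1 = 3 and S2 = 1, and the Bacteroidetes OTU forms a second row with S2 = 3.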

To visualize the summarized taxa, you can use the plot_taxa_summary.py script, which shows which taxa are present in all samples. To use this script, we need to set the taxonomy level label “-l”, an output directory “-o”, and the background color “-k” as white:

plot_taxa_summary.py -i wf_da/uclust_picked_otus/rep_set/rdp_assigned_taxonomy/otu_table/otu_table_Level3.txt -l Phylum -o wf_da/uclust_picked_otus/rep_set/rdp_assigned_taxonomy/otu_table/Taxa_Charts -k white

To view the resulting charts, open the area or bar chart html file located in the wf_da/uclust_picked_otus/rep_set/rdp_assigned_taxonomy/otu_table/Taxa_Charts/ folder. The following chart shows the taxa assignments for each sample as an area chart. Users can mouseover the plot to see which taxa are contributing to the percentage shown.

The following chart shows the taxa assignments for each sample as a bar chart.

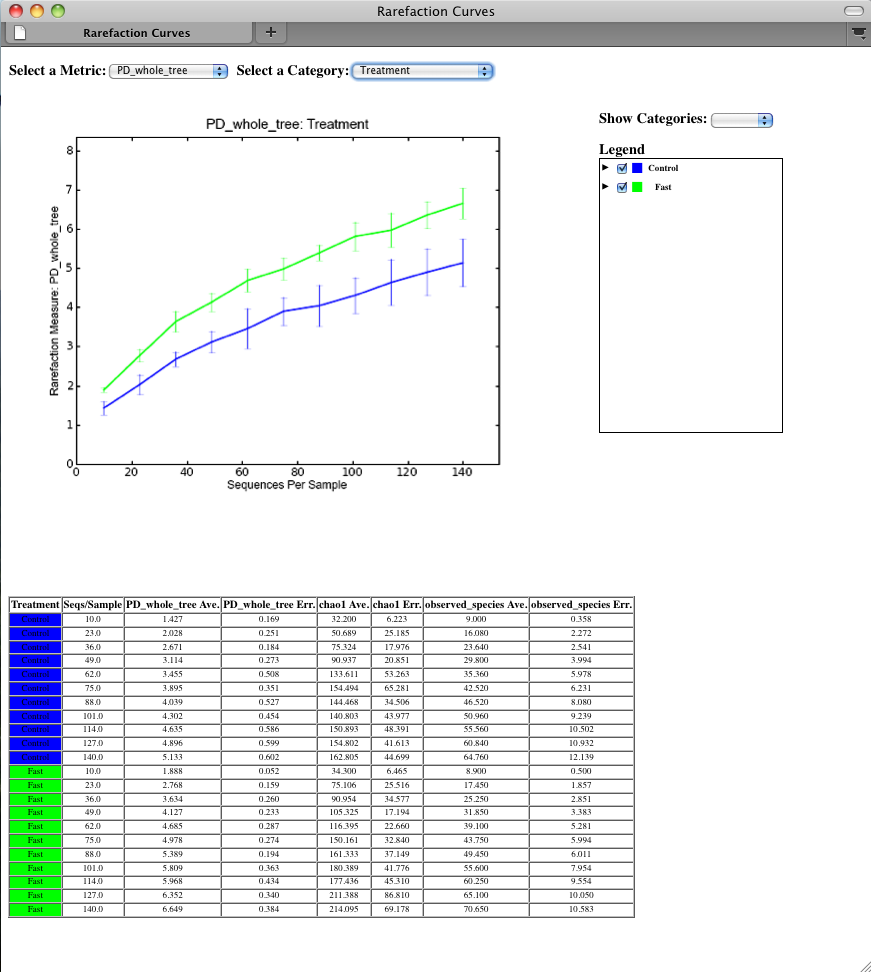

Community ecologists typically describe the microbial diversity within their study. This diversity can be assessed within a sample (alpha diversity) or between a collection of samples (beta diversity). Here, we will determine the level of alpha diversity in our samples using a series of scripts from the QIIME pipeline. To perform this analysis, we will use the alpha_rarefaction.py workflow script. We will first set the parameters in custom_parameters.txt, then at the end, we will run the script. This script performs the following steps:

For this highly artificial example, all of the samples had sequence counts between 146 and 150, which is discussed in more detail in Step 1. Rarify OTU Table to Remove Sample Heterogeneity (Optional). In real datasets, the range will generally be much larger. In practice, rarefaction is most useful when most samples have the specified number of sequences, so your upper bound of rarefaction should be close to the minimum number of sequences found in a sample. For this workflow script, the min/max values are defined by the workflow script itself. Users who would like to define their own values should perform each step individually. In custom_parameters.txt, the user can define the number of iterations at each sequences-per-sample level, for which we will use “num-rep 5”, and whether to include lineages, which we set to False:

The directory wf_arare/rarefaction/ will contain many text files named rarefaction_##_#.txt; the first set of numbers represents the number of sequences sampled, and the last number represents the iteration number. If you opened one of these files, you would find an OTU table in which, for each sample, the sum of the counts equals the number of sequences sampled.

The rarefaction tables are the basis for calculating diversity metrics, which reflect the diversity within the sample based on taxon counts or phylogeny. The QIIME pipeline allows users to conveniently calculate more than two dozen different diversity metrics. The full list of available metrics is available here. Every metric has different strengths and limitations; technical discussion of each metric is readily available online and in ecology textbooks, but it is beyond the scope of this document. Here, we will calculate three metrics:

In the custom_parameters.txt file, the user can define a comma-delimited list of alpha diversity metrics to use, as follows:

The result of this step produces several text files, located in the wf_arare/alpha_div/ directory.
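As a small illustration of what one such metric measures, the Python sketch below computes the number of observed OTUs (the count of OTUs with at least one sequence) for a single sample, and averages it over several rarefied iterations at the same depth. The function names and the example counts are hypothetical, and the averaging is only an illustration of how repeated rarefactions can be summarized.

```python
def observed_species(otu_counts):
    """Number of OTUs with a nonzero count in one sample."""
    return sum(1 for n in otu_counts.values() if n > 0)


def mean_observed(iterations):
    """Average observed-OTU count over rarefied tables for the same sample."""
    values = [observed_species(counts) for counts in iterations]
    return sum(values) / len(values)


# Two hypothetical rarefactions of one sample at the same depth (3 sequences).
iterations = [{"a": 2, "b": 0, "c": 1}, {"a": 1, "b": 1, "c": 1}]
print(mean_observed(iterations))  # prints 2.5
```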

The output directory wf_arare/alpha_div/ will contain one text file alpha_rarefaction_##_# for every file input from wf_arare/rarefaction/, where the numbers represent the number of sequences sampled and the iteration, as before. The content of this tab-delimited file is the calculated metrics for each sample. To collapse the individual files into a single combined table, the workflow uses the script collate_alpha.py. The user can define an “example_path” in the custom_parameters.txt file; for the tutorial, however, we will leave this blank.

In the newly created directory wf_arare/alpha_div_collated/, there will be one matrix for every diversity metric used in the alpha_diversity.py script. This matrix will contain the metric for every sample, arranged in ascending order from lowest number of sequences per sample to highest. A portion of the observed_species.txt file is shown below:

The script make_rarefaction_plots.py takes a mapping file and any number of rarefaction files generated by collate_alpha.py and uses matplotlib to create rarefaction curves. Each curve represents a sample and can be colored by the sample metadata supplied in the mapping file. In the custom_parameters.txt file, the user can set the image format (e.g. png), resolution (e.g. 75), and background_color (e.g. white) as follows:

This step generates a wf_arare/alpha_rarefaction_plots/average_tables/ folder, which contains the rarefaction averages for each diversity metric, so the user can plot the rarefaction curves in another application, like MS Excel. The wf_arare/alpha_rarefaction_plots/average_plots/ folder contains the average plots for each metric and category and the wf_arare/alpha_rarefaction_plots/html_plots/ folder contains all the images used in the html page generated. To view the rarefaction plots the user can open the file wf_arare/alpha_rarefaction_plots/rarefaction_plots.html in a browser. Once the browser window is open, the user can select the metric and category for whichever rarefaction plots they would like to display. The user can also turn on/off lines in the plot by (un)checking the box next to each label in the legend. The user can click on the triangle next to each label in the legend to see all the samples that contribute to that category. Below each plot, the user will see the average data over all metrics for the specified category.

Now that we have set the parameters necessary for this workflow script, the user can run the following command, where we define the input OTU table “-i” and tree file “-t” (from pick_otus_through_otu_table.py), the mapping file “-m”, the parameter file to use “-p”, and the output directory “-o”:

alpha_rarefaction.py -i wf_da/uclust_picked_otus/rep_set/rdp_assigned_taxonomy/otu_table/seqs_otu_table.txt -m Fasting_Map.txt -o wf_arare/ -p custom_parameters.txt -t wf_da/uclust_picked_otus/rep_set/pynast_aligned_seqs/fasttree_phylogeny/seqs_rep_set.tre

Here we will be running the beta_diversity_through_3d_plots.py workflow, which consists of the following steps:

To remove sample heterogeneity, we can perform rarefaction on our OTU table. Rarefaction is an ecological approach that allows users to standardize the data obtained from samples with different sequencing efforts, and to compare the OTU richness of the samples using this standardized platform. For instance, if one of your samples yielded 10,000 sequence counts, and another yielded only 1,000 counts, the species diversity within those samples may be much more influenced by sequencing effort than by underlying biology. The approach of rarefaction is to randomly sample the same number of sequences from each sample, and use this data to compare the communities at a given level of sampling effort.
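The random subsampling at the heart of rarefaction can be sketched in Python as drawing a fixed number of sequences, without replacement, from each sample's OTU counts. This is a minimal illustration and not QIIME's implementation; the function name, the seed argument, and the example counts are assumptions. A sample with fewer sequences than the requested depth is reported as None here.

```python
import random


def rarefy(otu_counts, depth, seed=None):
    """Subsample `depth` sequences without replacement from one sample.

    otu_counts maps OTU ID -> sequence count. Returns a new count mapping,
    or None if the sample has fewer than `depth` sequences.
    """
    pool = [otu for otu, n in otu_counts.items() for _ in range(n)]
    if len(pool) < depth:
        return None
    rng = random.Random(seed)
    rarefied = {}
    for otu in rng.sample(pool, depth):
        rarefied[otu] = rarefied.get(otu, 0) + 1
    return rarefied


sample = {"a": 5, "b": 3, "c": 2}  # hypothetical sample with 10 sequences
subsample = rarefy(sample, 4, seed=1)
print(sum(subsample.values()))  # prints 4
```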

To perform rarefaction, you need to set the boundaries for sampling and the step size between sampling intervals. You can find the number of sequences associated with each sample by looking in the split_library_log.txt file generated in Assign Samples to Multiplex Reads above. The line from our tutorial is pasted here:

Since we are only removing sample heterogeneity from the OTU table, we will use the “-e” option, which only requires the depth of sampling. Rarefaction is most useful when most samples have the specified number of sequences, so your upper bound of rarefaction should be close to the minimum number of sequences found in a sample. For this case, we will set the depth to 146.

Beta-diversity metrics assess the differences between microbial communities. In general, these metrics are calculated to study diversity along an environmental gradient (e.g. pH or temperature) or across different disease states (e.g. lean vs. obese). The basic output of this comparison is a square matrix in which a “distance” is calculated between every pair of samples, reflecting the dissimilarity between the samples. The data in this distance matrix can be visualized with ordination and clustering analyses, namely Principal Coordinate Analysis (PCoA) and UPGMA clustering. As with alpha diversity, there are many possible metrics which can be calculated with the QIIME pipeline; the full list of options can be found here. For our example, we will calculate weighted and unweighted UniFrac, which are phylogenetic measures used extensively in recent microbial community sequencing projects, by defining the metric parameter in the custom_parameters.txt file, as follows:

The resulting distance matrices (wf_bdiv_even146/unweighted_unifrac_seqs_otu_table.txt and wf_bdiv_even146/weighted_unifrac_seqs_otu_table.txt) are the basis for two methods of visualization and sample comparison: PCoA and UPGMA.
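To show what a square sample-by-sample distance matrix looks like, the Python sketch below builds one from per-sample OTU counts. UniFrac needs the phylogenetic tree, so this sketch deliberately substitutes the simpler, non-phylogenetic Bray-Curtis dissimilarity; it illustrates the structure of the output only and does not reproduce the UniFrac values computed by the workflow. The function names and the sample data are hypothetical.

```python
def bray_curtis(a, b):
    """Bray-Curtis dissimilarity between two {otu_id: count} samples."""
    otus = set(a) | set(b)
    num = sum(abs(a.get(o, 0) - b.get(o, 0)) for o in otus)
    den = sum(a.get(o, 0) + b.get(o, 0) for o in otus)
    return num / den if den else 0.0


def distance_matrix(samples):
    """Return (sample IDs, square matrix) for {sample_id: {otu_id: count}}."""
    ids = sorted(samples)
    matrix = [[bray_curtis(samples[i], samples[j]) for j in ids] for i in ids]
    return ids, matrix


samples = {  # hypothetical counts for two samples
    "S1": {"x": 2, "y": 2},
    "S2": {"x": 2, "z": 2},
}
ids, matrix = distance_matrix(samples)
print(ids, matrix)  # prints ['S1', 'S2'] [[0.0, 0.5], [0.5, 0.0]]
```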

Principal Coordinate Analysis (PCoA) is a technique that helps to extract and visualize a few highly informative gradients of variation from complex, multidimensional data. This is a complex transformation that maps the distance matrix to a new set of orthogonal axes such that a maximum amount of variation is explained by the first principal coordinate, the second largest amount of variation is explained by the second principal coordinate, etc. The principal coordinates can be plotted in two or three dimensions to provide an intuitive visualization of the data structure and look at differences between the samples, and look for similarities by sample category. The transformation is accomplished with the script principal_coordinates.py. Since this script only takes an input/output file, there are no parameters for the user to set in custom_parameters.txt.

The files wf_bdiv_even146/unweighted_unifrac_pc.txt and wf_bdiv_even146/weighted_unifrac_pc.txt list every sample in the first column, and the subsequent columns contain the value for the sample against the noted principal coordinate. At the bottom of each Principal Coordinate column, you will find the eigenvalue and percent of variation explained by the coordinate. To determine which axes are useful for your project, you can generate a “scree plot” by plotting the eigenvalues of each principal coordinate in descending order.
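The numbers behind a scree plot are simple to compute. The Python sketch below sorts a list of eigenvalues in descending order and converts each to the percent of variation it explains. It assumes all eigenvalues are positive, which is a simplification (PCoA on some distance matrices yields small negative eigenvalues); the function name and the eigenvalues are hypothetical.

```python
def percent_explained(eigenvalues):
    """Percent of total variation per axis, largest eigenvalue first."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    return [100.0 * value / total for value in ordered]


# Hypothetical eigenvalues, not taken from the tutorial output.
print(percent_explained([1.0, 3.0, 4.0]))  # prints [50.0, 37.5, 12.5]
```

Plotting these percentages against the axis index gives the scree plot; axes after the point where the curve flattens usually add little.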

In order to generate the PCoA plots, we want to generate a preferences file, which defines the colors for each of the samples or for a particular category within a mapping column. For more information on making a preferences file, please refer to make_prefs_file.py. In the custom_parameters.txt file, the user can set the background color to be used for the 3D PCoA plot (either black or white), the mapping header categories to plot (uses ALL if left blank) and the monte carlo distance to use (this is for make_distance_histograms.py, which we will do in a few steps).

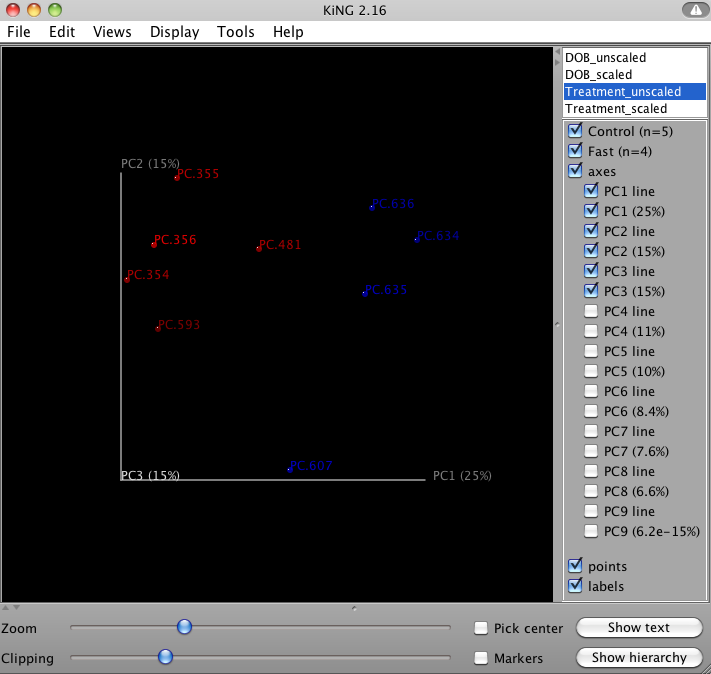

To plot the coordinates, you can use the QIIME scripts make_2d_plots.py and make_3d_plots.py. The two-dimensional plot will be rendered as an html file which can be opened with a standard web browser, while the three-dimensional plot will be a kinemage file which requires additional software to render and manipulate. Both scripts follow the same usage convention, detailed in make_3d_plots.py. Since the coloring was set in the preferences file parameters, we only need to set custom_axes in custom_parameters.txt, although we can leave it blank, as follows:

The html files are created in wf_bdiv_even146/unweighted_unifrac_3d... and wf_bdiv_even146/weighted_unifrac_3d... directories. In custom_parameters.txt, we specified under the make_prefs_file parameters that the samples should be colored by the value of the “Treatment” and “DOB” columns. For the “Treatment” column, all samples with the same “Treatment” will get the same color. For our tutorial, the five control samples are all blue and the four fasted samples are all green. This lets you easily visualize “clustering” by metadata category. The 3d visualization software allows you to rotate the axes to see the data from different perspectives. By default, the script will plot the first three dimensions in your file. Other combinations can be viewed using the “Views:Choose viewing axes” option in the KiNG viewer (may require the installation of kinemage software). The first 10 components can be viewed using the “Views:Paralleled coordinates” option or by typing “/”.

Now that we have set the parameters necessary for this workflow script, the user can run the following command, where we define the input OTU table “-i” and tree file “-t” (from pick_otus_through_otu_table.py), the parameter file to use “-p”, the user-defined mapping file “-m”, the output directory “-o” and the number of sequences per sample “-e”, set here to 146.

beta_diversity_through_3d_plots.py -i wf_da/uclust_picked_otus/rep_set/rdp_assigned_taxonomy/otu_table/seqs_otu_table.txt -m Fasting_Map.txt -o wf_bdiv_even146/ -p custom_parameters.txt -t wf_da/uclust_picked_otus/rep_set/pynast_aligned_seqs/fasttree_phylogeny/seqs_rep_set.tre -e 146

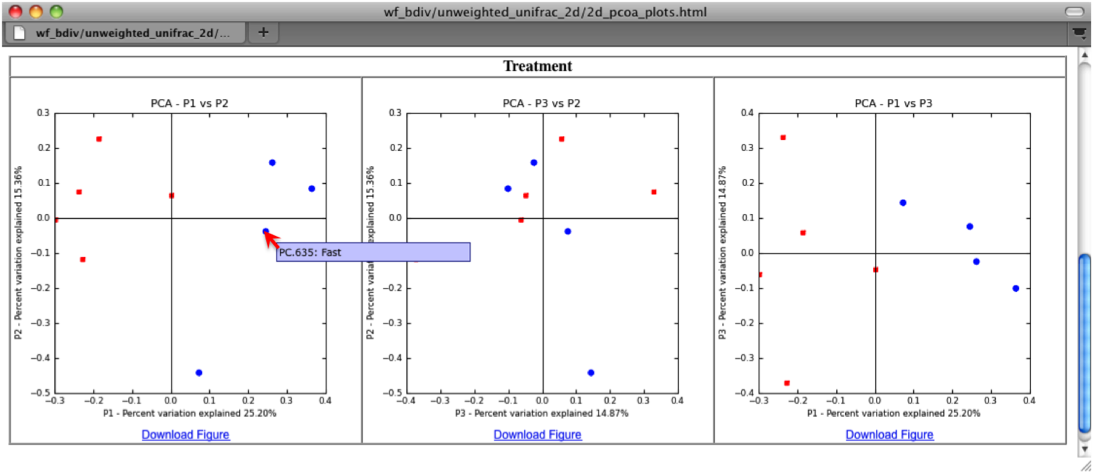

To plot the unweighted unifrac principal coordinates in 2D, you can use the QIIME script make_2d_plots.py. Here we will use the preferences file generated by beta_diversity_through_3d_plots.py, set the background color “-k” to white and write the results to wf_bdiv_even146/unweighted_unifrac_2d:

make_2d_plots.py -i wf_bdiv_even146/unweighted_unifrac_pc.txt -m Fasting_Map.txt -o wf_bdiv_even146/unweighted_unifrac_2d -k white -p wf_bdiv_even146/prefs.txt

The html file created in the directory wf_bdiv_even146/unweighted_unifrac_2d shows a plot for each combination of the first three principal coordinates. Since we specified Treatment and DOB for coloring the samples, each sample is colored according to the category to which it belongs. You can get the name of each sample by holding your mouse over the data point.

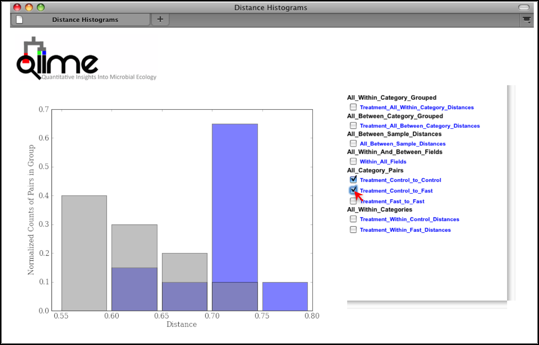

Distance Histograms are a way to compare different categories and see which tend to have larger/smaller distances than others. For example, in the hand study, you may want to compare the distances between hands to the distances between individuals. Here we will use the distance matrix and prefs file generated by beta_diversity_through_3d_plots.py, the mapping file, an output directory wf_bdiv_even146/Distance_Histograms and write the output as html, as follows:

make_distance_histograms.py -d wf_bdiv_even146/unweighted_unifrac_seqs_otu_table_even146.txt -m Fasting_Map.txt -o wf_bdiv_even146/Distance_Histograms -p wf_bdiv_even146/prefs.txt --html_output

For each of these groups of distances, a histogram is made. The output is an HTML file (wf_bdiv_even146/Distance_Histograms/QIIME_Distance_Histograms.html) where you can look at all the distance histograms individually and compare them with each other. Within the webpage, the user can mouse over and/or select the checkboxes in the right panel to turn on/off the different distances within/between categories. For this example, we are comparing the distances among the Control samples with the distances between the Fasting and Control samples.

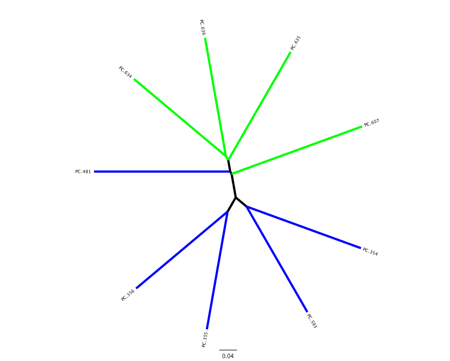
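The grouping behind these histograms can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the code used by make_distance_histograms.py: the function name group_distances, the sample IDs and the distance values are all invented for the example.

```python
from itertools import combinations


def group_distances(dist, categories):
    """Split pairwise distances into within- and between-category groups.

    dist: dict mapping frozenset({sample_a, sample_b}) -> distance
    categories: dict mapping sample ID -> category value
    """
    groups = {}
    for a, b in combinations(sorted(categories), 2):
        # Sort the two category values so that A-vs-B and B-vs-A share a label.
        first, second = sorted((categories[a], categories[b]))
        label = f"{first}_to_{second}"
        groups.setdefault(label, []).append(dist[frozenset((a, b))])
    return groups


# Toy data, invented for illustration (not taken from the tutorial output).
categories = {"S1": "Control", "S2": "Control", "S3": "Fast", "S4": "Fast"}
dist = {
    frozenset(("S1", "S2")): 0.30,
    frozenset(("S3", "S4")): 0.25,
    frozenset(("S1", "S3")): 0.70,
    frozenset(("S1", "S4")): 0.65,
    frozenset(("S2", "S3")): 0.75,
    frozenset(("S2", "S4")): 0.60,
}

groups = group_distances(dist, categories)
for label, values in sorted(groups.items()):
    print(label, sorted(values))
```

Each list of values would then be drawn as one histogram, so that within-category and between-category distances can be compared side by side.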

Unweighted Pair Group Method with Arithmetic mean (UPGMA) is a type of hierarchical clustering method that uses average linkage. It can be used to visualize the distance matrix produced by beta_diversity.py.

The output is a file that can be opened with tree viewing software, such as FigTree.

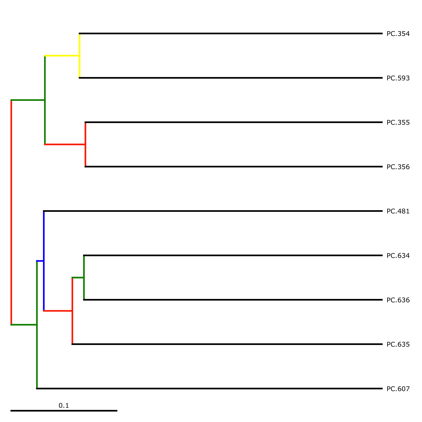

This tree shows the relationship among the 9 samples, and reveals that the 4 samples from the guts of fasting mice (PC.6xx; the treatment of each sample is listed in Fasting_Map.txt) cluster together.
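To make the average-linkage idea concrete, the following is a minimal pure-Python sketch of UPGMA on a toy distance matrix. It is not the implementation used by upgma_cluster.py; the function name upgma, the sample names and the distances are assumptions made for illustration, and the tree is returned as nested tuples without branch lengths.

```python
from itertools import combinations


def upgma(names, dist):
    """Average-linkage clustering; returns the tree as nested tuples.

    names: list of sample IDs
    dist: dict mapping frozenset({a, b}) -> distance for every pair of samples
    """
    # Each cluster is (subtree, tuple of member sample IDs).
    clusters = [(name, (name,)) for name in names]

    def average_distance(c1, c2):
        # UPGMA distance: mean of all pairwise distances between members.
        pairs = [(a, b) for a in c1[1] for b in c2[1]]
        return sum(dist[frozenset(p)] for p in pairs) / len(pairs)

    while len(clusters) > 1:
        # Find the two closest clusters and merge them.
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: average_distance(clusters[ij[0]], clusters[ij[1]]),
        )
        merged = (
            (clusters[i][0], clusters[j][0]),
            clusters[i][1] + clusters[j][1],
        )
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)]
        clusters.append(merged)
    return clusters[0][0]


# Toy distances, invented for illustration.
names = ["A", "B", "C", "D"]
dist = {
    frozenset(("A", "B")): 0.10,
    frozenset(("C", "D")): 0.20,
    frozenset(("A", "C")): 0.80,
    frozenset(("A", "D")): 0.90,
    frozenset(("B", "C")): 0.85,
    frozenset(("B", "D")): 0.95,
}

tree = upgma(names, dist)
print(tree)
```

In this toy case the two close pairs are merged first and then joined at the root, which is the same kind of grouping the tutorial tree shows for the fasted samples.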

To measure the robustness of this result to sequencing effort, we perform a jackknifing analysis, wherein a smaller number of sequences are chosen at random from each sample, and the resulting UPGMA tree from this subset of data is compared with the tree representing the entire available data set. This process is repeated with many random subsets of data, and the tree nodes which prove more consistent across jackknifed datasets are deemed more robust.

First the jackknifed OTU tables must be generated, by subsampling the full available data set. In this tutorial, each sample contains between 146 and 150 sequences, as shown in the split_library_log.txt file:

Note

To ensure that a random subset of sequences is selected from each sample, we chose to select 110 sequences from each sample (75% of the smallest sample, though this value is only a guideline), which is designated by the “-e” option when running the workflow script (see below). In the custom_parameters.txt file, we set the number of jackknife replicates as follows:

Note

This generates 20 subsets of the available data, each subset a simulation of a smaller sequencing effort (110 sequences in each sample, as defined below).
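The subsampling step can be sketched as follows. This is a hedged illustration rather than the rarefaction code QIIME itself runs: the function names rarefy and jackknife_tables, the toy OTU counts and the fixed random seed are assumptions, and rounding 75% of the smallest sample up to a whole number is simply one way to arrive at the 110 used in this tutorial.

```python
import math
import random
from collections import Counter


def rarefy(otu_counts, depth, rng):
    """Draw `depth` sequences at random, without replacement, from one sample."""
    pool = [otu for otu, n in otu_counts.items() for _ in range(n)]
    if depth > len(pool):
        raise ValueError("depth exceeds the number of sequences in the sample")
    return Counter(rng.sample(pool, depth))


def jackknife_tables(table, depth, replicates, seed=0):
    """Build `replicates` subsampled OTU tables, each at the same depth."""
    rng = random.Random(seed)
    return [
        {sample: rarefy(counts, depth, rng) for sample, counts in table.items()}
        for _ in range(replicates)
    ]


# Toy OTU table, invented for illustration: samples of 146 and 150 sequences.
table = {
    "sampleA": {"otu1": 100, "otu2": 46},
    "sampleB": {"otu1": 60, "otu2": 50, "otu3": 40},
}

smallest = min(sum(counts.values()) for counts in table.values())
depth = math.ceil(0.75 * smallest)  # 75% of 146 is 109.5, rounded up here
tables = jackknife_tables(table, depth, replicates=20)
print(smallest, depth, len(tables))
```

Every replicate table holds the same number of sequences per sample, so differences between the resulting trees reflect random sampling rather than unequal sequencing depth.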

We then calculate the distance matrix for each jackknifed dataset, using beta_diversity.py as before, but now in batch mode, which results in 20 distance matrix files written to the wf_jack/unweighted_unifrac/rare_dm/ and wf_jack/weighted_unifrac/rare_dm/ directories. Each of those is then used as the basis for UPGMA clustering, using upgma_cluster.py in batch mode and written to the wf_jack/unweighted_unifrac/rare_upgma/ and wf_jack/weighted_unifrac/rare_upgma/ directories.

UPGMA clustering of the 20 distance matrix files results in 20 UPGMA sample clusterings, each based on a random sub-sample of the available sequence data. These are then compared to the UPGMA result using all available data.

This compares the UPGMA clustering based on all available data with the jackknifed UPGMA results. Three files are written to wf_jack/unweighted_unifrac/upgma_cmp/ and wf_jack/weighted_unifrac/upgma_cmp/:

- master_tree.tre, which is virtually identical to jackknife_named_nodes.tre, but each internal node of the UPGMA clustering is assigned a unique name

- jackknife_named_nodes.tre

- jackknife_support.txt explains how frequently a given internal node had the same set of descendant samples in the jackknifed UPGMA clusters as it does in the UPGMA cluster using the full available data. A value of 0.5 indicates that half of the jackknifed data sets support that node, while 1.0 indicates perfect support.
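The support value described above can be illustrated with a short sketch. It assumes trees are written as nested tuples of sample names, which is not the Newick format QIIME writes to disk, and the function names clades and jackknife_support as well as the toy trees are invented for the example.

```python
def clades(tree):
    """Return (leaves of tree, set of leaf sets, one per internal node)."""
    if isinstance(tree, str):
        return frozenset([tree]), set()
    leaves = frozenset()
    found = set()
    for child in tree:
        child_leaves, child_found = clades(child)
        leaves |= child_leaves
        found |= child_found
    found.add(leaves)
    return leaves, found


def jackknife_support(master, replicates):
    """Fraction of replicate trees containing each internal node of master."""
    _, master_clades = clades(master)
    replicate_clades = [clades(tree)[1] for tree in replicates]
    return {
        clade: sum(clade in rc for rc in replicate_clades) / len(replicate_clades)
        for clade in master_clades
    }


# Toy trees, invented for illustration.
master = (("A", "B"), ("C", "D"))
replicates = [
    (("A", "B"), ("C", "D")),
    (("A", "B"), ("C", "D")),
    ((("A", "B"), "C"), "D"),
    (("A", "C"), ("B", "D")),
]

support = jackknife_support(master, replicates)
for clade, value in sorted(support.items(), key=lambda kv: sorted(kv[0])):
    print(sorted(clade), value)
```

A node is counted as supported only when a replicate tree contains an internal node with exactly the same set of descendant samples, which matches the definition given for jackknife_support.txt.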

Now that we have set the parameters necessary for this workflow script, the user can run the following command, where we define the input OTU table “-i” and tree file “-t” (from pick_otus_through_otu_table.py), the parameter file to use “-p”, the mapping file “-m”, the output directory “-o” and the number of sequences per sample “-e” (i.e. 110):

jackknifed_beta_diversity.py -i wf_da/uclust_picked_otus/rep_set/rdp_assigned_taxonomy/otu_table/seqs_otu_table.txt -o wf_jack -p custom_parameters.txt -e 110 -t wf_da/uclust_picked_otus/rep_set/pynast_aligned_seqs/fasttree_phylogeny/seqs_rep_set.tre -m Fasting_Map.txt

As an example, we can visualize the bootstrapped tree for unweighted unifrac with make_bootstrapped_tree.py, as follows:

make_bootstrapped_tree.py -m wf_jack/unweighted_unifrac/upgma_cmp/master_tree.tre -s wf_jack/unweighted_unifrac/upgma_cmp/jackknife_support.txt -o wf_jack/unweighted_unifrac/upgma_cmp/jackknife_named_nodes.pdf

The resulting pdf shows the tree with internal nodes colored: red for 75-100% support, yellow for 50-75%, green for 25-50%, and blue for < 25% support. Although UPGMA shows that PC.354 and PC.593 cluster together and that PC.481 clusters with PC.6xx, we cannot have high confidence in that result. However, there is excellent jackknife support for all fasted samples (PC.6xx) clustering together, separate from the non-fasted (PC.35x) samples.
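The color scheme can be written as a small lookup. This is only a sketch: the function name support_color is invented, and because the ranges listed above share their end points, treating each lower bound as inclusive is an assumption rather than documented behavior of make_bootstrapped_tree.py.

```python
def support_color(support):
    """Map a jackknife support fraction (0.0 to 1.0) to a node color."""
    if not 0.0 <= support <= 1.0:
        raise ValueError("support must be between 0 and 1")
    if support >= 0.75:  # assumed inclusive lower bound
        return "red"
    if support >= 0.50:
        return "yellow"
    if support >= 0.25:
        return "green"
    return "blue"


for value in (1.0, 0.6, 0.3, 0.1):
    print(value, support_color(value))
```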

Users can run the workflow scripts in parallel by passing the “-a” option to each of the scripts. In the custom_parameters.txt file, users can customize the number of jobs to start (jobs_to_start), whether to keep the temporary files generated (retain_temp_files), and the number of seconds to sleep (seconds_to_sleep). If running on a dual-core computer, you can set the number of jobs to start to 2, as follows:

Note

Now that we have gone through the whole tutorial and customized the custom_parameters.txt file, we can run the shell scripts, which contain all the commands that you ran in this tutorial, from the Terminal. To run the shell scripts, you may need to allow all users to execute them, using the following commands:

chmod a+x ./qiime_tutorial_commands_serial.sh

chmod a+x ./qiime_tutorial_commands_parallel.sh

To run the QIIME tutorial in serial:

./qiime_tutorial_commands_serial.sh

To run the QIIME tutorial in parallel:

./qiime_tutorial_commands_parallel.sh

Crawford, P. A., Crowley, J. R., Sambandam, N., Muegge, B. D., Costello, E. K., Hamady, M., et al. (2009). Regulation of myocardial ketone body metabolism by the gut microbiota during nutrient deprivation. Proc Natl Acad Sci U S A, 106(27), 11276-11281.